Trending Now

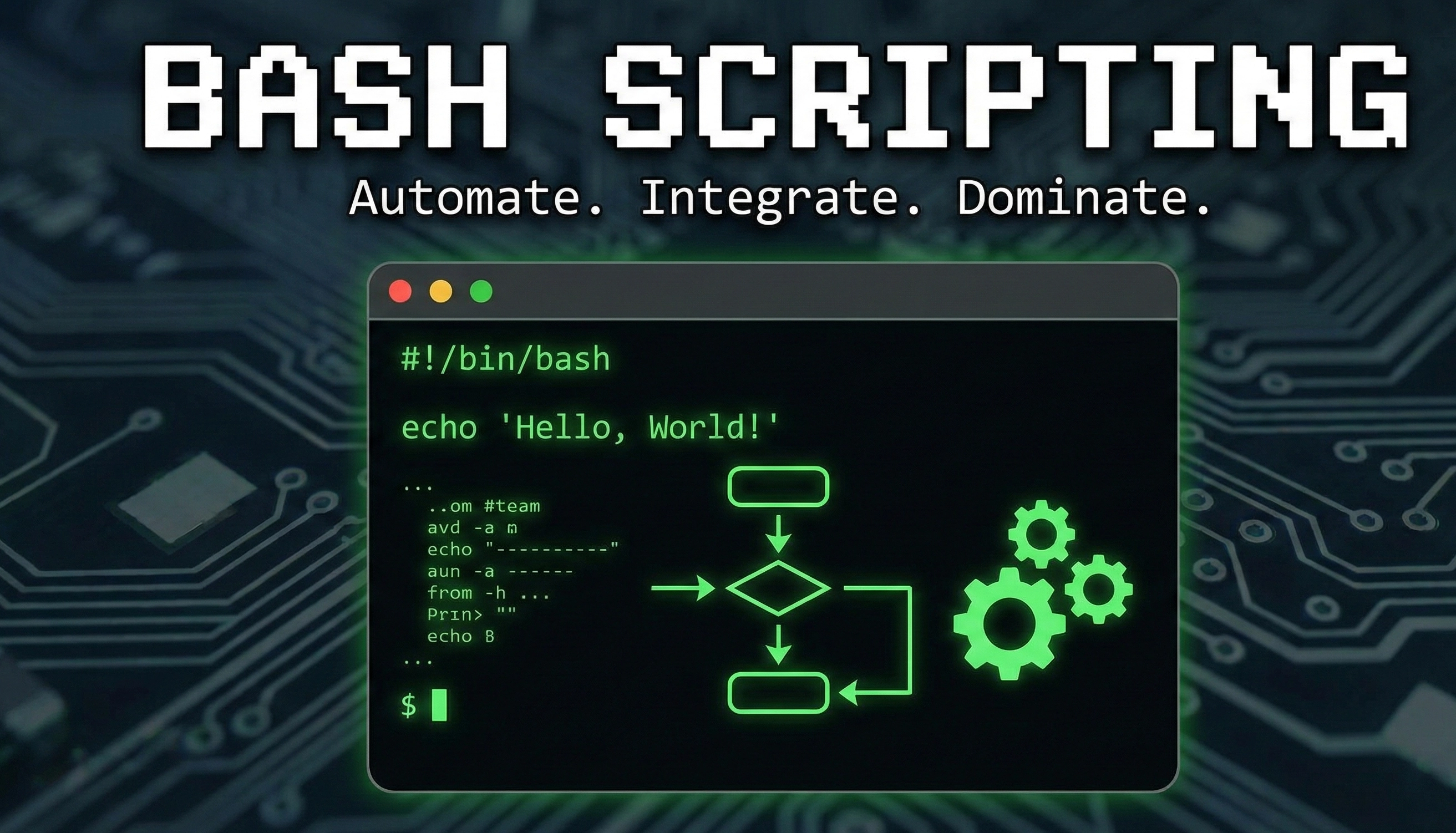

WCWS CMS

Automate Virtual Machine Creation in Proxmox with Cloud-Init: Complete Guide

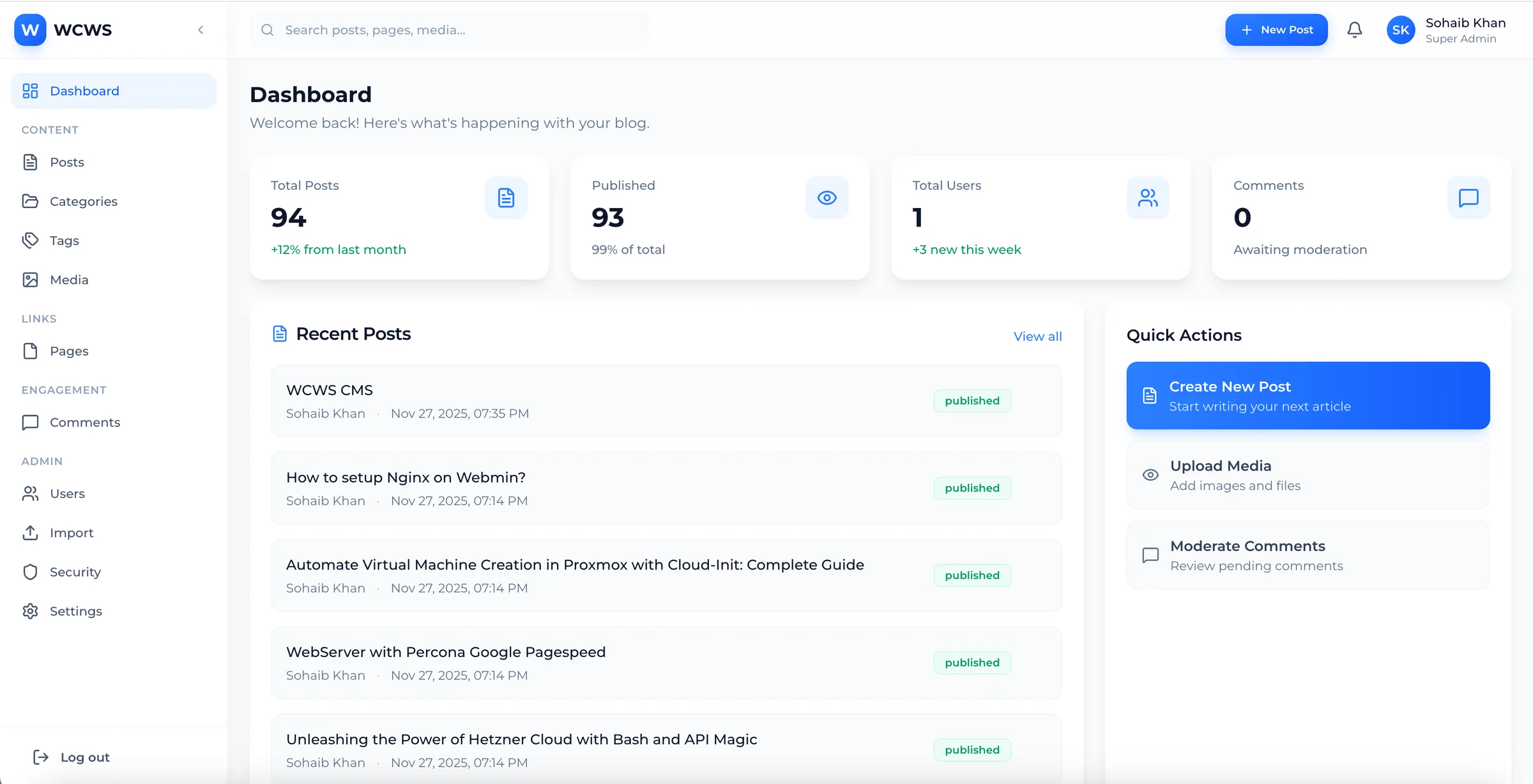

Building the Future of Voice: My Journey Creating AI Voice Agents

Latest Articles

DocFlux 2.0: A PDF Knowledge Base

AI Phone System

In today’s fast-paced world, seamless communication is more crucial than ever. Whether it’s connecting with customers or managing internal calls, the ability to...

DocFlux Intelligence: A Deep Dive into an Advanced Document Analysis System

Web Whisper: Breaking Free from Desktop Constraints

AI Interview Assistant

In a world where technology streamlines processes, the human touch remains essential, especially in interviews. You might wonder how an AI Interview Assistant c...

How to Install BitNinja on CloudPanel for Server Security

Securing your server is a critical aspect of maintaining a robust and reliable online presence. BitNinja, a comprehensive security solution, provid...

Automate Service Monitoring with a Bash Script and Systemd

Keeping your essential services running smoothly is crucial for any server. Ensuring that services like Nginx and MariaDB are always up and running can be a dau...

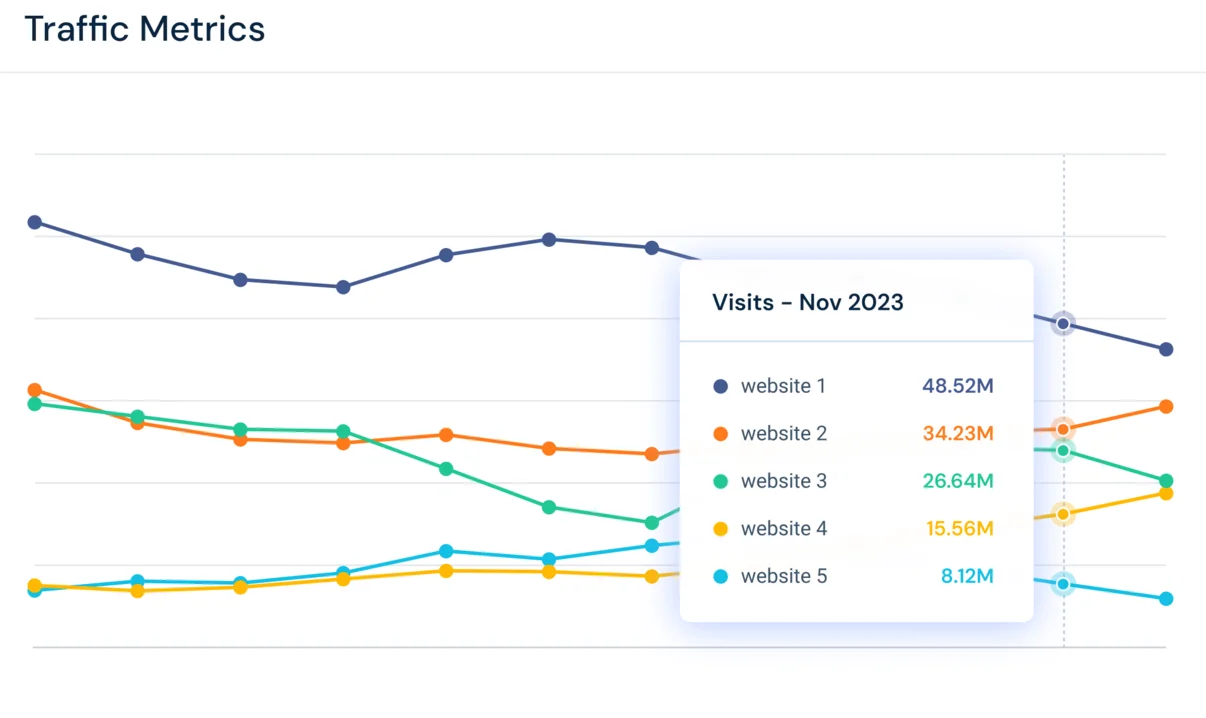

Monitoring Website Traffic with a Simple Script

In the world of website management, keeping track of traffic is essential. Monitoring access logs can help you understand the volume of traffic and identify pot...

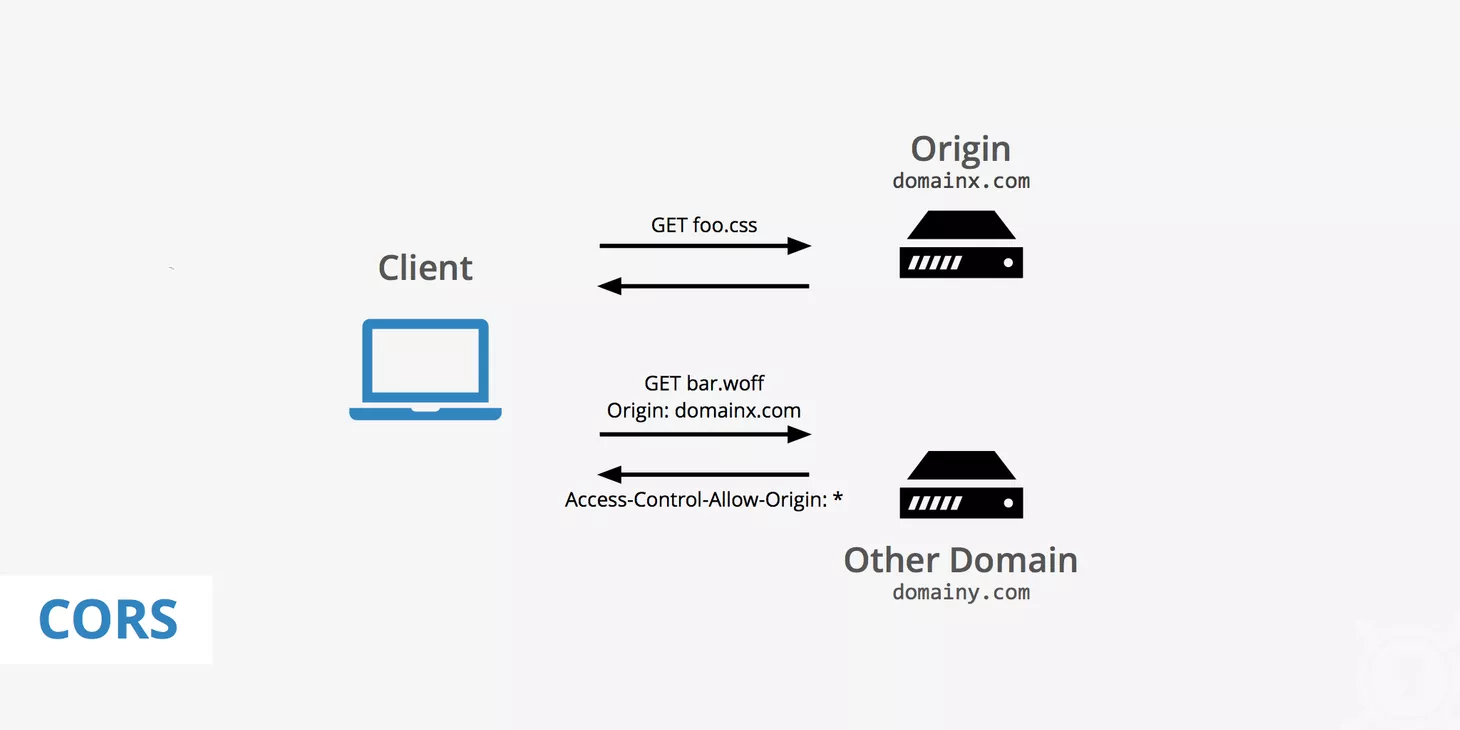

Battling "Cross-Origin Request Blocked": A Journey from Frustration to Triumph

We've all been there. You're managing your website, everything's running smoothly, and then—bam! You decide to change your domain from https://www.mydomain.com...

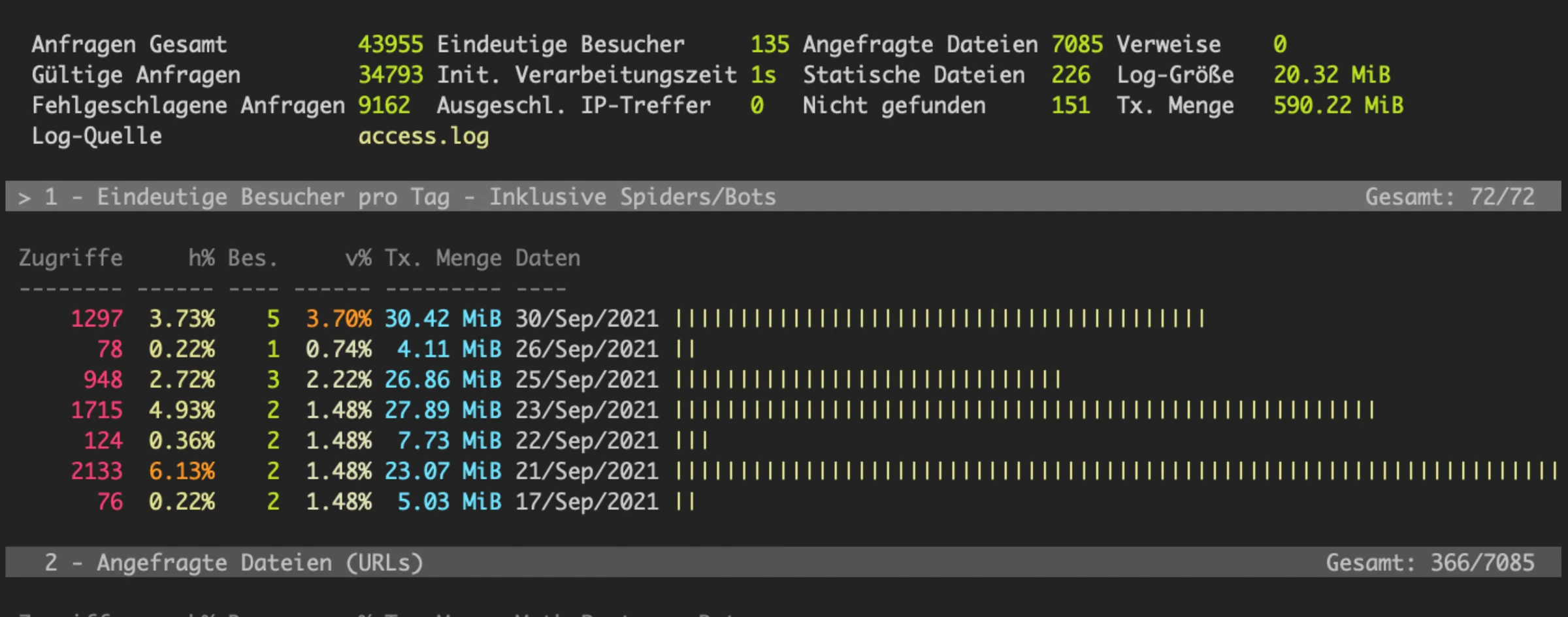

Understanding access.log and Its Importance

Your server's access.log file is a crucial component for maintaining the health and performance of your website. This log file records every request made to you...
